Shantanu's Blog

Database ConsultantAugust 19, 2009

Adminer Interface

It is lightweight but as powerful as it can get. It is just one .php file with NO configuration required.

wget -directory-prefix=/var/www/ -O adminer.php http://tinyurl.com/769ataj

Labels: mysql tips

Using partitions as filters

http://explainextended.com/2009/08/05/mysql-limit-on-limit/

And I just found out another practical way. Using partitions for data filtering!

I have used partitions only when there is a need to tame a huge table. But the alogrithum can also be used to speed up and simplify the complex queries. If you want to extract the data that belongs to 9 and 11 August, and process it further, why not to give this a try?

mysql> CREATE TABLE employees (separated DATETIME) PARTITION BY list (to_days(separated)) (

PARTITION p0 VALUES IN (to_days('2009-08-09')),

PARTITION p1 VALUES IN (to_days('2009-08-11'))

);

mysql> insert ignore into employees select * from t1;

Query OK, 172800 rows affected (0.92 sec)

Records: 1000000 Duplicates: 827200 Warnings: 0

Out of a million records, only 172,800 records were added to employees table those satisfied the date condition of being 9 or 11 August 09. We can now query our new table employees in order to speed up the joins and sorting.

The main disadvantage of this approach is that temporary tables can not be partitioned and therefore I can not use "create temporary table". If two threads try to work on the employees table then one of the threads will either fail or show wrong results. This trick can not be used in production, but is handy for admins.

Labels: mysql 5.1, mysql tips

August 18, 2009

MySQL Case Study - 177

Hi all, I'm kind of stuck with a little problem. I know I can create PHP functions to create the results I want, but I was wondering if their is some unique way in mysql to accomplish this.

Basically here is my query to get a list of all the ages and a count of the number of employees for that age.

PHP Code:

SELECT FLOOR((TO_DAYS(NOW())-TO_DAYS(dob))/365.25) as Age,Count(db_id) as Count

FROM tbl_shareholder_list

WHERE current_employer = 'ASRC'

AND current_employee = 1

GROUP BY Age

What I'm trying to do is get a report that lumps a range of ages. So it comes out like

AgeRange --- Count

18-25 32

26-30 45

31-35 50

And so on and so on. Is there a trick to doing this or is it going to be a mess of IF statements and subqueries?

http://forums.devshed.com/mysql-help-4/grouping-by-age-ranges-18-25-26-30-etc-628617.html

Labels: mysql case study

August 15, 2009

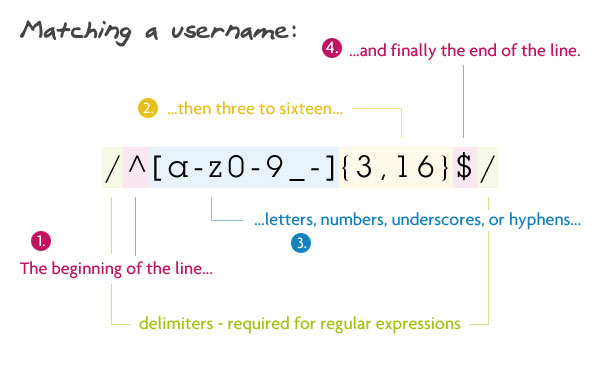

8 Regular Expressions You Should Know

Regular expressions are a language of their own. When you learn a new programming language, they're this little sub-language that makes no sense at first glance.

But with the graphical representation as above, the things become very clear.

I was looking for such an explanation for a very long time.

http://net.tutsplus.com/tutorials/other/8-regular-expressions-you-should-know/

Labels: mysql tips

August 11, 2009

More examples of Sed and Awk

$ cat awktest.txt

MainRecord1 "115494",","FAELD","CT","

MainRecord2 "245774"," ,"","Gp"

MainRecord3 "165295","Aive","AHS","S",""

MainRecord4 "256254"," MOTOR "

$ sed '/^$/q' awktest.txt

MainRecord1 "115494",","FAELD","CT","

MainRecord2 "245774"," ,"","Gp"

MainRecord3 "165295","Aive","AHS","S",""

MainRecord4 "256254"," MOTOR "

$ sed -n '/^$/q;p' awktest.txt

MainRecord1 "115494",","FAELD","CT","

MainRecord2 "245774"," ,"","Gp"

MainRecord3 "165295","Aive","AHS","S",""

MainRecord4 "256254"," MOTOR "

$ awk 'NF && $0 !~ /"Footer/' awktest.txt

MainRecord1 "115494",","FAELD","CT","

MainRecord2 "245774"," ,"","Gp"

MainRecord3 "165295","Aive","AHS","S",""

MainRecord4 "256254"," MOTOR "

$ awk '!NF { exit } 1' awktest.txt

MainRecord1 "115494",","FAELD","CT","

MainRecord2 "245774"," ,"","Gp"

MainRecord3 "165295","Aive","AHS","S",""

MainRecord4 "256254"," MOTOR "

$ awk ' NF {print} !NF {exit}' awktest.txt

MainRecord1 "115494",","FAELD","CT","

MainRecord2 "245774"," ,"","Gp"

MainRecord3 "165295","Aive","AHS","S",""

MainRecord4 "256254"," MOTOR "

_____

// to select record in the range of 00 to 04

$ cat awktest.txt

date 18:00:00

date 18:01:02

date 18:02:00

date 19:06:00

date 18:03:00

date 18:05:00

$ awk '{print $2}' | awk -F ":" '{if ($2<=4) print $0}' < awktest.txt

date 18:00:00

date 18:01:02

date 18:02:00

date 18:03:00

_____

$ cat file1.txt

abc|0|xyz

123|129|opq

def|0|678

890|pqw|sdf

// print record where second column has value of 0

$ awk -F'|' '$2=="0"' file1.txt

abc|0|xyz

def|0|678

_____

$ cat file1.txt

abc|0|xyz

123|129|opq

def|0|678

890|pqw|sdf

// replace the character 'a' with Apostrophe

$ sed -e "s/a/'/" file1.txt

'bc|0|xyz

123|129|opq

def|0|678

890|pqw|sdf

$ tr a "'" < file1.txt

'bc|0|xyz

123|129|opq

def|0|678

890|pqw|sdf

_____

$ cat infile.txt

|A|21|B1||1.1|

|A|21|C|RAGH|1.1|

|A|21|D1||1.1|

|A|21|C|YES|1.1

// replace blank cells with "NA"

$ awk 'BEGIN { FS="|"; OFS="|" } { if ($5=="") $5="NA"; print }' infile.txt

|A|21|B1|NA|1.1|

|A|21|C|RAGH|1.1|

|A|21|D1|NA|1.1|

|A|21|C|YES|1.1

$ awk -F"|" '$5 == "" {$5="NA"; print; next} {print}' OFS="|" infile.txt

|A|21|B1|NA|1.1|

|A|21|C|RAGH|1.1|

|A|21|D1|NA|1.1|

|A|21|C|YES|1.1

$ perl -ne '{s/(?<=\|)(?=\|)/NA/g;print;}' infile.txt

|A|21|B1|NA|1.1|

|A|21|C|RAGH|1.1|

|A|21|D1|NA|1.1|

|A|21|C|YES|1.1

Labels: unix case study

UNIX case study - 21

How do I add a counter for the duplicate vlaues?

$ cat mysort.txt

yan

tar

tar

man

ban

tan

tub

tub

tub

$ awk '{print $1,word[$1]++}' mysort.txt

yan 0

tar 0

tar 1

man 0

ban 0

tan 0

tub 0

tub 1

tub 2

Labels: unix case study

UNIX case study - 20

$ cat mysort.txt

004002004545454000001

041002004545222000002

006003008751525000003

007003008751352000004

006003008751142000005

004001005745745000006

$ sort -k 1,5 mysort.txt

004001005745745000006

004002004545454000001

006003008751142000005

006003008751525000003

007003008751352000004

041002004545222000002

I want to sort the file according to position 1-5 and secondary sort by the last position of file 16-21

the result should be like this (file2) :

004002004545454000001

004001005745745000006

006003008751525000003

006003008751142000005

007003008751352000004

041002004545222000002

http://www.unix.com/shell-programming-scripting/115911-sort-text-file.html

Ans:

sort -k 1.1,1.5 -k 1.16,1.21 mysort.txt

Labels: unix case study

UNIX case study - 19

I mainly work with altering columns with awk but now I encountered a problem with dealing with rows.

So what I want to do is only print rows that start with a specific name. For example:

## joe jack john

ty1 3 4

ty1 5 6

ty2 4 7

tym 5 6

tyz 7 9

Basically what I want to do is get rid of the row with ## and tym and tyz. So I only want to print ty1, and ty2

So the output will look like this:

ty1 3 4

ty1 5 6

ty2 4 7

http://www.unix.com/shell-programming-scripting/116087-only-printing-certain-rows.html

Labels: unix case study

UNIX case study - 18

I have a data as follow:

1 400

2 239

3 871

4 219

5 543

6 ...

7 ...

.. ...

.. ...

99 818

100 991

I want to replace the sequence number (column 1) that start from 150. The output should like this:

150 400

151 239

153 871

154 219

155 543

...

...

Can anyone tell me AWK code for this....

http://www.unix.com/shell-programming-scripting/116062-changing-sequence-number.html

Labels: unix case study

August 10, 2009

MySQL Case Study - 176

I have a string:

$string = "12,15,22";

Each of these values represents a usrID and I'd like to use these to select the rows associated with the $string.

Something like this:

SELECT usrFirst, usrLast FROM tblusers WHERE usrID = 12 OR 15 OR 22.

Now, the $string changes all the time, and sometimes can be a different number of ID's. Eg., sometimes 3, sometimes 4.

http://stackoverflow.com/questions/1225658/php-mysql-use-string-to-select-from-database

Labels: mysql case study

MySQL Case Study - 175

I want to match a String which looks like this:

[lang_de]Hallo![/lang_de][lang_en]Hello![/lang_en]HeyHo[lang_es]Hola![/lang_es]

I want the matching to return true if there is text which is not enclosed by lang_tags (in this example: HeyHo). It could also be positioned at the beginning or end of the string.

Whitespace should NOT match, e.g. [lang_de]Tisch[/lang_de] [lang_en]Table[/lang_en] should not cause a match.

I can't use lookahead or lookback, because MySQL doesnt seem to support this.

Any Suggestions?

http://stackoverflow.com/questions/1224723/need-regexp-matching-in-mysql

Labels: mysql case study

August 03, 2009

weekly group by

SELECT t1 . * , WEEK( t1.tradingday ) wk

FROM daily_prices t1

LEFT

JOIN daily_prices t2 ON week( t1.tradingday ) = WEEK( t2.tradingday )

AND t1.tradingday > t2.tradingday

WHERE t2.securityid IS NULL ;

Pretty Interesting!

http://forums.mysql.com/read.php?10,273597,273638#msg-273638

Labels: mysql case study

August 01, 2009

Rollback to MyISAM?

When I tried to insert 3, 4, 1 and 5 in a myisam table, it accepted 3 & 4 but failed on 1. We lost the last record, 5

In case of InnoDB, it rejected the entire statement and hence 3 & 4 were not added to the table.

Great.

But what happens when I kill / cancel the statement using Ctrl + C as I always do?

As per the bug 45309, even the InnoDB tables are partially populated just like MyISAM tables!

http://bugs.mysql.com/bug.php?id=45309

I guess unless you are using foreign keys, it is better to use the MyISAM tables.

_____

mysql> create table test_myisam (i int primary key) engine=myisam;

Query OK, 0 rows affected (0.19 sec)

mysql> create table test_innodb (i int primary key) engine=innodb;

Query OK, 0 rows affected (0.18 sec)

mysql> insert into test_myisam values (1),(2);

Query OK, 2 rows affected (0.19 sec)

Records: 2 Duplicates: 0 Warnings: 0

mysql> insert into test_innodb values (1),(2);

Query OK, 2 rows affected (0.18 sec)

Records: 2 Duplicates: 0 Warnings: 0

mysql> insert into test_myisam values (3),(4),(1),(5);

ERROR 1062 (23000): Duplicate entry '1' for key 'PRIMARY'

mysql> select * from test_myisam;

+---+

| i |

+---+

| 1 |

| 2 |

| 3 |

| 4 |

+---+

4 rows in set (0.18 sec)

mysql> insert into test_innodb values (3),(4),(1),(5);

ERROR 1062 (23000): Duplicate entry '1' for key 'PRIMARY'

mysql> select * from test_innodb;

+---+

| i |

+---+

| 1 |

| 2 |

+---+

2 rows in set (0.18 sec)

Labels: mysql tips

Auto incremented primary key

create table test_me (

id int auto_increment not null,

xx varchar(9),

key(id),

primary key (xx));

Contrary to my belief, id int not null auto_increment need not be a primary key!

I can declare it as a key and assign another column the primary status. I don't know where I will use this, but it helps knowing the possibilities. May be to avoid replication conflict.

Labels: mysql tips

Archives

June 2001 July 2001 January 2003 May 2003 September 2003 October 2003 December 2003 January 2004 February 2004 March 2004 April 2004 May 2004 June 2004 July 2004 August 2004 September 2004 October 2004 November 2004 December 2004 January 2005 February 2005 March 2005 April 2005 May 2005 June 2005 July 2005 August 2005 September 2005 October 2005 November 2005 December 2005 January 2006 February 2006 March 2006 April 2006 May 2006 June 2006 July 2006 August 2006 September 2006 October 2006 November 2006 December 2006 January 2007 February 2007 March 2007 April 2007 June 2007 July 2007 August 2007 September 2007 October 2007 November 2007 December 2007 January 2008 February 2008 March 2008 April 2008 July 2008 August 2008 September 2008 October 2008 November 2008 December 2008 January 2009 February 2009 March 2009 April 2009 May 2009 June 2009 July 2009 August 2009 September 2009 October 2009 November 2009 December 2009 January 2010 February 2010 March 2010 April 2010 May 2010 June 2010 July 2010 August 2010 September 2010 October 2010 November 2010 December 2010 January 2011 February 2011 March 2011 April 2011 May 2011 June 2011 July 2011 August 2011 September 2011 October 2011 November 2011 December 2011 January 2012 February 2012 March 2012 April 2012 May 2012 June 2012 July 2012 August 2012 October 2012 November 2012 December 2012 January 2013 February 2013 March 2013 April 2013 May 2013 June 2013 July 2013 September 2013 October 2013 January 2014 March 2014 April 2014 May 2014 July 2014 August 2014 September 2014 October 2014 November 2014 December 2014 January 2015 February 2015 March 2015 April 2015 May 2015 June 2015 July 2015 August 2015 September 2015 January 2016 February 2016 March 2016 April 2016 May 2016 June 2016 July 2016 August 2016 September 2016 October 2016 November 2016 December 2016 January 2017 February 2017 April 2017 May 2017 June 2017 July 2017 August 2017 September 2017 October 2017 November 2017 December 2017 February 2018 March 2018 April 2018 May 2018 June 2018 July 2018 August 2018 September 2018 October 2018 November 2018 December 2018 January 2019 February 2019 March 2019 April 2019 May 2019 July 2019 August 2019 September 2019 October 2019 November 2019 December 2019 January 2020 February 2020 March 2020 April 2020 May 2020 July 2020 August 2020 September 2020 October 2020 December 2020 January 2021 April 2021 May 2021 July 2021 September 2021 March 2022 October 2022 November 2022 March 2023 April 2023 July 2023 September 2023 October 2023 November 2023 April 2024 May 2024 June 2024 August 2024 September 2024 October 2024 November 2024 December 2024 January 2025 February 2025 April 2025 June 2025 July 2025 August 2025 November 2025